January 30, 2026

Introduction

Cybersecurity discussions should start with business goals and end with technical controls. Today’s goal is to mitigate the risk of wire transfer fraud at hedge funds (or any business). Wires are the preferred instrument for investors, brokers, and fund administrators to move money around. LOTS OF MONEY. Wire transfers are the lifeblood of investment operations. Whether you’re deploying capital to trading strategies, settling positions, or managing investor redemptions, your firm likely processes multiple wire transfers daily. For a hedge fund managing hundreds of millions (or billions) in assets, the stakes couldn’t be higher.

Yet many alternative investment firms operate their wire transfer processes with security controls designed for a previous era. That’s dangerous. In 2026, the threat landscape has fundamentally shifted. Sophisticated attackers no longer need to exploit forgotten passwords or poorly configured cloud servers. Instead, they’re using artificial intelligence to impersonate your CFO, your fund manager, or your operations director in real time.

This guide provides practical, implementable steps to secure wire transfer operations against modern threats, including the emerging risk of AI-powered voice and video impersonation that many hedge funds have not yet adequately addressed.

The Real-World Cost of Wire Fraud

Consider what happened at the Hong Kong office of a multinational firm (later identified as the engineering firm Arup) in early 2024. An employee joined a video conference call that appeared completely legitimate. Multiple senior colleagues were on the screen, including the company’s CFO. The faces and voices were convincing. The request was presented as a confidential transaction. The employee authorized transfers totaling $25.6 million.

Every other person on that call was a deepfake. According to public reporting, the attackers built the synthetic participants from existing, publicly available video and audio of the real executives.

Building a deepfake meeting like this no longer requires weeks of preparation or specialized research capabilities. For a firm whose executives appear in investor presentations and media interviews, the training material is already published, and the generative AI tools needed to exploit it are available to anyone with an internet connection.

For alternative investment firms, this isn’t a hypothetical risk. It’s an emerging reality affecting financial institutions worldwide.

Why Hedge Funds Are High-Value Targets

Hedge funds present attractive targets for two reasons:

1. Scale and Velocity: Unlike retail banks processing routine transactions, hedge funds execute large-value transfers regularly. A successful $20-50 million fraud doesn’t require compromising hundreds of accounts; it requires compromising one authorization decision. Per successful attack, that makes a hedge fund a far more lucrative target than a retail banking operation.

2. Operational Complexity: Alternative investment firms operate leaner than traditional financial institutions. Operations teams often juggle multiple roles. The CFO may authorize transfers based on verbal requests from the fund manager. Deal teams may request wire transfers across multiple jurisdictions. This operational flexibility, a feature that makes hedge funds competitive, also creates security vulnerabilities.

Attackers understand these dynamics. They specifically target alternative investment firms because the risk-reward calculation favors fraud.

Part 1: Wire Transfer Fraud Fundamentals

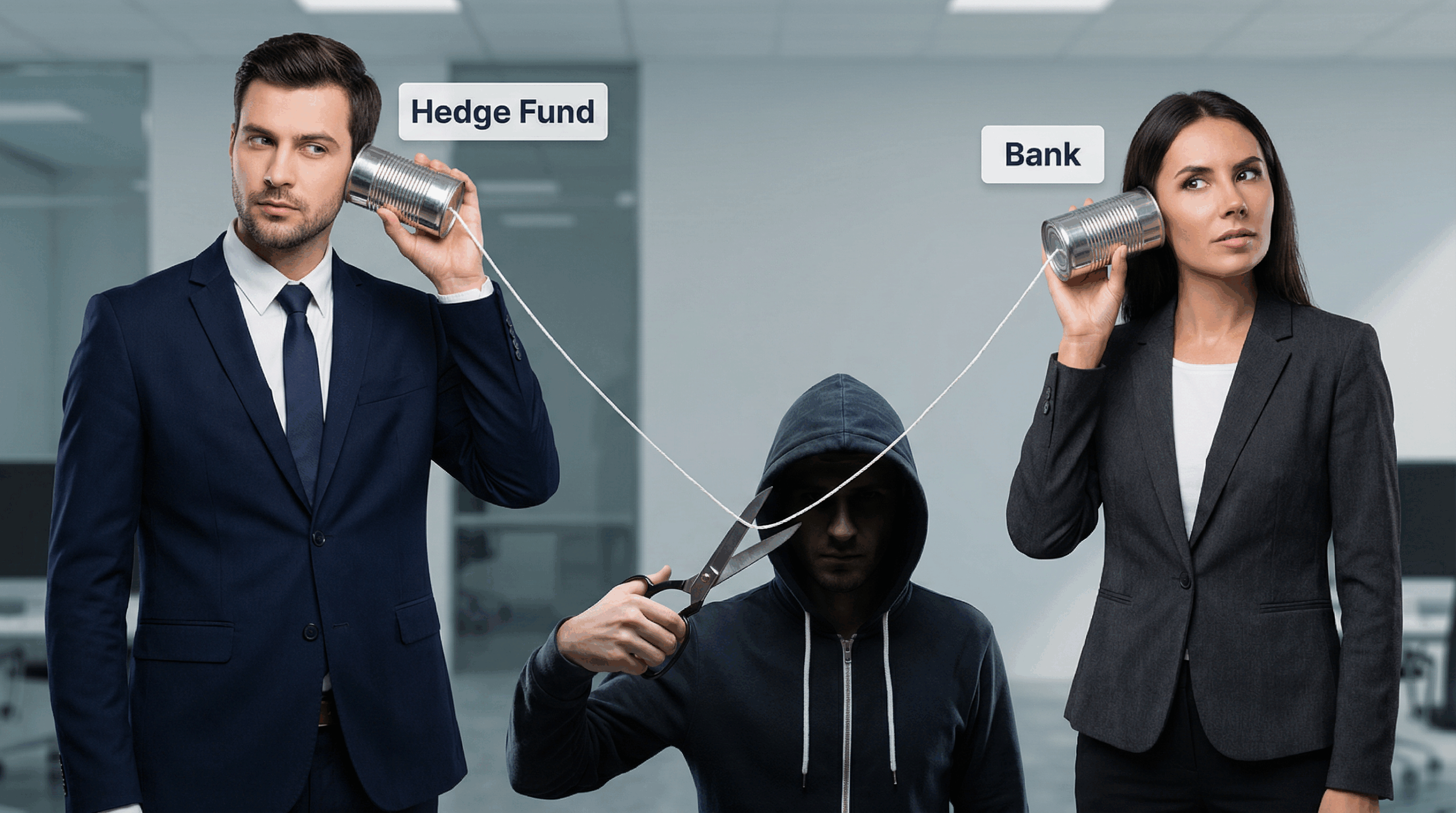

The Attack Vector: Business Email Compromise (BEC)

Before diving into AI-powered attacks, understand the baseline threat: Business Email Compromise. This attack succeeds despite being fundamentally simple:

- Attacker identifies a wire transfer authority (usually your CFO)

- Attacker sends a spoofed email requesting a transfer

- The email is crafted with urgency, legitimate context, and authority

- An employee, conditioned to move quickly, authorizes the transfer

- By the time verification happens, funds are already moving through intermediary banks

Research shows nearly 75% of attempted wire fraud involves email spoofing or compromised accounts. The method is proven and scalable.

The Evolution: AI-Powered Impersonation

Now add AI to this mix. Instead of an email, the attacker places a phone call using a synthetic voice that sounds identical to your CEO. Or they join a Zoom call using a deepfake video where they appear to be your CFO. The conversation can happen in real time. The attacker can answer questions. They can provide context about why the transfer is needed.

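Why does this matter? Because controls that rely on recognizing the person, such as “have a conversation to confirm” or “just get them on a video call,” become ineffective when the attacker sounds and looks identical to the person you think you’re talking to. Even “require a callback” fails if the callback goes to a number the attacker supplied. Verification only holds up when it runs through channels the attacker does not control.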

Part 2: Understanding AI-Based Voice and Video Impersonation

Voice Cloning: How It Works

Modern voice cloning requires surprisingly little source material:

- Basic clone: 3 seconds of clear audio

- High-quality clone: 10-30 seconds capturing subtle vocal characteristics

- Training time: Minutes, using cloud computing resources

- Cost: Approximately $1.33 per generated deepfake audio sample

Where do attackers find source material? From the same places you do:

- Quarterly earnings call recordings on your investor relations website

- Conference presentations uploaded to YouTube

- CNBC interviews with your fund manager

- LinkedIn video posts from your operations team

- Company promotional videos

- Podcast appearances

Hedge fund executives routinely participate in these activities as part of normal business development and fundraising. Every conference panel, every investor call, every media appearance provides attackers with free training data.

Video Deepfakes: The Multimodal Threat

Video deepfakes represent an even more sophisticated threat. Advanced generative AI can now create:

- Synchronized facial movements matching speech patterns

- Natural body language and hand gestures

- Lighting and background consistency with the person’s actual environment

- Real-time rendering during live video calls

The 2024 Hong Kong incident demonstrates this threat’s maturity. Attackers staged an entire video meeting populated by multiple synthetic executives. The employee, who had initially suspected the request might be a phishing attempt, was convinced by the call and did not recognize the participants as fake.

Why Existing Biometric Authentication Fails

Many organizations rely on voice recognition for authentication. “Voice biometric systems guarantee security,” they claim. That confidence is now obsolete.

Modern voice cloning can bypass voice recognition systems that are supposed to verify identity: the synthetic audio is close enough to the real voice to fool the algorithms. Similarly, video-based facial recognition systems can be defeated by deepfake video feeds.

If your authentication strategy depends on “just verify via video call,” you have a critical vulnerability.

Part 3: Practical Wire Transfer Security Controls

Control 1: Segregation of Duties and Dual Authorization

The Practice: Require that no single individual can authorize a wire transfer above a defined threshold. Define thresholds based on your firm’s risk tolerance:

- Transfers $1-5 million: Authorizer + Approver (two different people)

- Transfers $5-20 million: Authorizer + Senior Approver + Compliance Review (three different people)

- Transfers above $20 million: Add a fourth independent review level – of course a really small fund might run out of people at this point!
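The threshold table above can be encoded so that a payments workflow enforces it automatically rather than relying on memory. The Python sketch below is illustrative only. The role names are hypothetical, transfers under $1 million are assumed to need a single authorizer (the table does not cover them), and each boundary amount is assumed to fall in the higher tier, so exactly $5 million needs three people.

```python
from decimal import Decimal
from typing import Dict, Optional, Tuple

# Hypothetical role names; map them to your own org chart.
# Each tier: (exclusive upper bound in USD, or None for "no limit"; required roles).
APPROVAL_TIERS = [
    (Decimal("1000000"), ("authorizer",)),  # assumption: not defined in the text
    (Decimal("5000000"), ("authorizer", "approver")),
    (Decimal("20000000"), ("authorizer", "senior_approver", "compliance")),
    (None, ("authorizer", "senior_approver", "compliance", "independent_reviewer")),
]


def required_roles(amount: Decimal) -> Tuple[str, ...]:
    """Return the roles that must sign off on a wire of this amount."""
    for upper_bound, roles in APPROVAL_TIERS:
        if upper_bound is None or amount < upper_bound:
            return roles
    raise AssertionError("unreachable: the last tier has no upper bound")


def is_fully_approved(amount: Decimal, approvals: Dict[str, Optional[str]]) -> bool:
    """approvals maps role -> person ID. Every required role must be filled,
    and no single person may fill two roles (segregation of duties)."""
    people = [approvals.get(role) for role in required_roles(amount)]
    if any(person is None for person in people):
        return False
    return len(set(people)) == len(people)


# Usage: a $3.5M wire needs two different people; a $12M wire needs three.
print(is_fully_approved(Decimal("3500000"), {"authorizer": "cfo", "approver": "coo"}))   # True
print(is_fully_approved(Decimal("12000000"), {"authorizer": "cfo", "approver": "coo"}))  # False
```

Checking for distinct people, not just filled roles, is what makes this a segregation-of-duties control: one compromised or impersonated executive cannot satisfy two slots.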

Why This Works:

This control prevents a single successful deepfake attack from resulting in fund loss. Even if an attacker compromises the ability to impersonate your CFO convincingly, they still need to manipulate multiple people independently.

Implementation:

- Document authorization thresholds in writing

- Establish clear role definitions (only CFO can authorize, only COO can approve, only CEO can release, etc.)

- Maintain audit logs showing who authorized each transfer

- Rotate approval authorities periodically (reduces targeting effectiveness)

Hedge Fund Specific Consideration: In smaller alternative investment firms, this may require operational process changes. If the fund manager currently authorizes transfers directly on their own verbal request, implement an intermediate approval chain: trading manager requests → operations manager submits to CFO → CFO requests approval from COO → COO authorizes. This adds 15-30 minutes to routine transfers but can stop catastrophic fraud.

Control 2: Independent Verification Using Pre-Established Channels

The Practice: When a wire transfer authorization request is received, verify it using communication channels established before any suspicious request occurred. Never use contact information from the suspicious communication itself.

Specific Implementation:

Your operations team has a contact list with phone numbers and email addresses for executives. When a wire authorization request arrives, use the pre-existing contact information to verify. Call the CFO’s office number (from your directory, not from the email requesting the transfer). Use internal Slack channels or Microsoft Teams channels that existed before the request.
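The essential rule is that the verification contact always comes from your own records, never from the request. A minimal Python sketch of that rule follows. It assumes a hypothetical in-memory `DIRECTORY` keyed by role (in practice this would be your HR or phone system) with placeholder 555 numbers, and, as an extra assumption, it raises a red flag when the request supplies a callback number that differs from the directory entry.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical directory maintained independently of any inbound request.
DIRECTORY: Dict[str, str] = {
    "cfo": "+1 212 555 0100",
    "coo": "+1 212 555 0101",
}


@dataclass(frozen=True)
class WireRequest:
    claimed_sender: str                       # who the request says it is from
    numbers_in_request: Tuple[str, ...] = ()  # any phone numbers quoted in the request


def _digits(number: str) -> str:
    """Normalize '+1 (212) 555-0100' and '+1-212-555-0100' to the same digits."""
    return "".join(ch for ch in number if ch.isdigit())


def plan_verification(request: WireRequest, directory: Dict[str, str]) -> Tuple[str, bool]:
    """Return (number_to_call, red_flag).

    The number to call is ALWAYS the directory entry. red_flag is True when the
    request offers a different callback number, a common BEC tell."""
    number = directory.get(request.claimed_sender)
    if number is None:
        raise LookupError(f"No directory entry for {request.claimed_sender!r}; escalate")
    red_flag = any(_digits(n) != _digits(number) for n in request.numbers_in_request)
    return number, red_flag
```

Note that the function never returns a number taken from the request, even when it matches; the request’s contents only ever add suspicion.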

Real-World Example:

Employee receives email from “CFO@yourfund.com” requesting $10 million wire to a new vendor account. The email includes a new bank account number and routing information.

✓ Correct Response: Put down the email. Call the CFO’s office number (from the company directory, not from any recent communication) and ask: “I received a request to wire $10 million to [bank account]. Did you make this request?” Proceed only if the CFO confirms directly on that call.

✗ Incorrect Response: Call the phone number provided in the email. Call any number recently texted to you. Reply to the email asking the sender to confirm. All of these routes likely reach the attacker.

Why This Defeats Deepfake Attacks:

A deepfake attack only works inside the channel the attacker controls. If the attacker is generating synthetic video and audio on the fly, they can respond to questions on that call or thread. But when you place a new call to the CFO’s office number from your own directory, it rings the real CFO. Unless the attacker has separately compromised that line, they have no way to intercept it.

This control remains effective against AI impersonation because it breaks the attacker’s ability to maintain the deception across multiple independent verification channels.

Control 3: Mandatory Waiting Periods for Unusual Transfers

The Practice: Establish a rule: Even after all authorizations are complete, unusual or high-value wire transfers must have a mandatory waiting period before execution.

Define “unusual” as:

- New beneficiary accounts (first time wiring to this account)

- Deviations from established payment patterns

- Non-standard transfer timing (middle of night, weekend, holidays)

- Requests that bypass normal procedures

Waiting Period Duration:

- Minimum 30 minutes for routine transfers

- 2-4 hours for unusual transfers

- 24 hours for first-time transfers to accounts in a new jurisdiction
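The criteria and durations above translate directly into a release-time calculation. A hedged Python sketch: it assumes business hours of 08:00-18:00 Monday to Friday, a caller-supplied holiday set, and a hold clock that starts when the last authorization is recorded, and it uses the 4-hour upper end of the 2-4 hour range for unusual transfers. The `Transfer` fields are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, datetime, timedelta
from typing import AbstractSet


@dataclass(frozen=True)
class Transfer:
    requested_at: datetime        # when the request arrived
    authorized_at: datetime       # when the final authorization was recorded
    first_time_beneficiary: bool  # never wired to this account before
    new_jurisdiction: bool        # beneficiary bank is in a jurisdiction we have not used
    deviates_from_pattern: bool   # amount/frequency outside established norms
    bypassed_procedure: bool      # request skipped a normal step


def is_off_hours(ts: datetime, holidays: AbstractSet[date]) -> bool:
    # Assumption: business hours are 08:00-18:00, Monday-Friday, local time.
    return ts.weekday() >= 5 or not (8 <= ts.hour < 18) or ts.date() in holidays


def earliest_release(t: Transfer, holidays: AbstractSet[date] = frozenset()) -> datetime:
    """Earliest time the wire may be executed after all approvals are complete."""
    unusual = (
        t.first_time_beneficiary
        or t.deviates_from_pattern
        or t.bypassed_procedure
        or is_off_hours(t.requested_at, holidays)
    )
    if t.first_time_beneficiary and t.new_jurisdiction:
        wait = timedelta(hours=24)
    elif unusual:
        wait = timedelta(hours=4)  # upper end of the 2-4 hour range
    else:
        wait = timedelta(minutes=30)  # minimum hold for routine transfers
    return t.authorized_at + wait
```

Because the hold is computed rather than negotiated, a caller insisting that “the market’s closing in 30 minutes” has nothing to push against.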

Why This Works:

Attackers create urgency. “The market’s closing in 30 minutes, we need to deploy this capital now.” “The redemption request came in this afternoon, we have to wire by EOD.” Pressure tactics are fundamental to social engineering attacks.

A mandatory waiting period:

- Eliminates the urgency that attackers rely on

- Provides time for additional verification

- Allows executives to have separate conversations confirming the request

- Gives you time to contact the counterparty through independent channels

Real-World Impact:

In the Hong Kong deepfake case, a 24-hour waiting period could have given the real CFO and other executives time to learn of the request before funds left the organization’s control. Instead, by the time the actual executives learned about the “transaction they never authorized,” money had already been moved through intermediary accounts.

Control 4: Challenge Questions and Rotating Secrets

The Practice: Establish rotating challenge questions between executives and operations staff that reference specific, verifiable information only genuine participants would know.

Examples:

- “What was the name of the client we onboarded in Q3 2025?” (Only your actual executives know)

- “What was the amount of capital we deployed in the September trading strategy?” (Verified from internal systems)

- “Which portfolio manager presented at last month’s internal investment committee meeting?” (Verifiable internally, unlike a public conference lineup)

Critical Requirement: Rotate these questions monthly. If an attacker observes one question-answer pair, they can’t use that same pair in future attacks.
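If challenge questions live in a shared document, anyone who reads that document can answer them. A minimal Python sketch, assuming questions are issued through a hypothetical internal tool: it stores only a salted PBKDF2 hash of each answer, compares answers in constant time, and refuses any question older than 30 days to enforce monthly rotation. The iteration count and the example answer are assumptions.

```python
import hashlib
import hmac
import os
import unicodedata
from dataclasses import dataclass
from datetime import date, timedelta

ROTATION_PERIOD = timedelta(days=30)  # "rotate monthly", approximated as 30 days
PBKDF2_ITERATIONS = 200_000           # assumption; tune to your hardware


def _normalize(answer: str) -> bytes:
    """Ignore case and extra whitespace so 'Acme  Capital' matches 'acme capital'."""
    text = unicodedata.normalize("NFKC", answer).casefold()
    return " ".join(text.split()).encode("utf-8")


@dataclass(frozen=True)
class ChallengeQuestion:
    prompt: str
    salt: bytes
    answer_hash: bytes  # only a salted hash is stored, never the answer itself
    issued_on: date


def create_question(prompt: str, answer: str, issued_on: date) -> ChallengeQuestion:
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", _normalize(answer), salt, PBKDF2_ITERATIONS)
    return ChallengeQuestion(prompt, salt, digest, issued_on)


def check_answer(q: ChallengeQuestion, answer: str, today: date) -> bool:
    if today - q.issued_on >= ROTATION_PERIOD:
        return False  # expired questions must never authorize a wire
    candidate = hashlib.pbkdf2_hmac("sha256", _normalize(answer), q.salt, PBKDF2_ITERATIONS)
    return hmac.compare_digest(candidate, q.answer_hash)  # constant-time comparison
```

Hard expiry matters as much as hashing: a question observed during one call becomes useless to the attacker once the period rolls over.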

Why This Defeats Deepfakes:

Deepfake attacks based on voice cloning or video synthesis can’t access your internal operational knowledge. An attacker can reproduce your CEO’s voice perfectly, but they can’t answer specific questions about internal deals, client names, or strategic decisions without additional intelligence gathering.

This control requires the attacking deepfake to be integrated with separately obtained operational intelligence—significantly raising the bar for attack success.

Control 5: Encryption and Secure Communication Channels

The Practice: Establish encrypted communication channels dedicated to wire transfer authorizations:

- Microsoft Teams: Use a dedicated Teams channel instead of email (Teams encrypts messages in transit and at rest, but standard channel messages are not end-to-end encrypted)

- WhatsApp Business: Establish a verified WhatsApp group with all wire authorization participants

- Signal: Use end-to-end encrypted messaging apps for sensitive communications

- Unique challenge protocol: Establish a pattern that non-participants can’t easily replicate

Message Protocol Example:

Wire authorization requests must be submitted through the established encrypted channel including:

- Beneficiary name (from existing vendor database)

- Beneficiary bank account (from existing records, not new accounts)

- Amount

- Business purpose (one-sentence description)

- Timestamp

- Authorizer digital signature (if using enterprise encryption)

Messages received through other channels automatically trigger enhanced verification.
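The message protocol above can be checked mechanically before a human ever reads the request. The Python sketch below is a minimal illustration, not a feature of Teams, WhatsApp, or Signal. It assumes requests arrive as structured messages through an internal integration, uses an HMAC over the required fields with a shared secret as a stand-in for a true digital signature (which would use asymmetric keys), and assumes a hypothetical channel name and a 15-minute freshness window to block replayed messages. Anything that fails a check is routed to enhanced verification rather than silently dropped.

```python
import hashlib
import hmac
import json
from datetime import datetime, timedelta
from typing import Dict

REQUIRED_FIELDS = ("beneficiary", "account", "amount", "purpose", "timestamp")
APPROVED_CHANNEL = "wire-authorizations"  # hypothetical channel name
MAX_AGE = timedelta(minutes=15)           # assumption: reject stale or replayed requests


def canonical_bytes(message: Dict[str, str]) -> bytes:
    """Serialize only the required fields, in a fixed order, for signing."""
    payload = {name: message[name] for name in REQUIRED_FIELDS}
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(message: Dict[str, str], key: bytes) -> str:
    # HMAC-SHA256 stand-in for the "authorizer digital signature" field.
    return hmac.new(key, canonical_bytes(message), hashlib.sha256).hexdigest()


def triage(message: Dict[str, str], channel: str, key: bytes, now: datetime) -> str:
    """Return 'accept' or 'enhanced_verification'. now must be timezone-aware."""
    if channel != APPROVED_CHANNEL:
        return "enhanced_verification"
    if any(name not in message for name in REQUIRED_FIELDS + ("signature",)):
        return "enhanced_verification"
    try:
        if not hmac.compare_digest(sign(message, key), message["signature"]):
            return "enhanced_verification"  # tampered, or signed with another key
        age = now - datetime.fromisoformat(message["timestamp"])
    except (TypeError, ValueError):
        return "enhanced_verification"  # malformed signature or timestamp
    if not timedelta(0) <= age <= MAX_AGE:
        return "enhanced_verification"
    return "accept"
```

Signing the account number along with the other fields means an attacker who edits only the destination account in transit invalidates the whole request.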

Why This Works:

Encrypted channels create a separate communication pathway that deepfake phone calls and video meetings can’t access. An attacker generating synthetic audio and video still needs to communicate the wire transfer request. If your firm’s policy requires encrypted channel communication, the attacker must either:

- Compromise the encrypted channel (significantly harder)

- Use a different communication method (triggering enhanced verification procedures)

Control 6: AI-Based Anomaly Detection for Transactions

The Practice: Implement transaction monitoring systems that use machine learning to identify unusual patterns:

- New beneficiary accounts

- Transfers to jurisdictions where your firm has no active business

- Amounts deviating significantly from historical patterns

- Timing patterns inconsistent with operational norms

- Velocity patterns (multiple transfers to same beneficiary in short timeframe)
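Commercial platforms use trained machine-learning models for this. The Python sketch below is deliberately simpler: a set of hand-written rules standing in for a model, to show what each listed signal means in code. The thresholds (a z-score above 3, two or more earlier wires to the same account within 24 hours, 08:00-18:00 weekday business hours) and the record fields are assumptions, not vendor defaults.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import mean, pstdev
from typing import List, Set


@dataclass(frozen=True)
class Wire:
    account: str    # beneficiary account identifier
    country: str    # beneficiary bank's jurisdiction
    amount: float
    sent_at: datetime


@dataclass
class History:
    wires: List[Wire] = field(default_factory=list)
    active_countries: Set[str] = field(default_factory=set)


def anomaly_flags(
    wire: Wire,
    history: History,
    z_limit: float = 3.0,
    velocity_window: timedelta = timedelta(hours=24),
    velocity_limit: int = 2,
) -> List[str]:
    """Return the names of every rule the wire trips; any flag means escalate."""
    flags = []
    if wire.account not in {w.account for w in history.wires}:
        flags.append("new_beneficiary")
    if wire.country not in history.active_countries:
        flags.append("unfamiliar_jurisdiction")
    amounts = [w.amount for w in history.wires]
    if len(amounts) >= 2:
        mu, sigma = mean(amounts), pstdev(amounts)
        # With zero spread there is no meaningful z-score, so this rule stays silent.
        if sigma > 0 and abs(wire.amount - mu) / sigma > z_limit:
            flags.append("amount_outlier")
    if wire.sent_at.weekday() >= 5 or not 8 <= wire.sent_at.hour < 18:
        flags.append("off_hours")
    recent_same = [
        w for w in history.wires
        if w.account == wire.account and timedelta(0) <= wire.sent_at - w.sent_at <= velocity_window
    ]
    if len(recent_same) >= velocity_limit:
        flags.append("velocity")
    return flags
```

Returning named flags rather than a single score keeps the escalation explainable to the reviewer who has to act on it.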

Integration:

These systems work alongside human authorization, not as replacement. When the system flags an unusual pattern, it automatically escalates the transaction for additional review before execution.

Vendor Options:

Financial institutions typically use platforms like Outseer, FICO, or SAS for transaction monitoring. These solutions integrate with banking platforms and wire processing systems.

Why This Matters:

Transaction monitoring provides a safety net. Even if a human authorization process is compromised by a convincing deepfake, the transaction monitoring system flags the unusual pattern independently.

Consider the Hong Kong case: large transfers to previously unseen beneficiary accounts would likely have raised several of the anomaly flags listed above. A human might be fooled by a convincing deepfake video call, but a transaction monitoring system evaluates the payment rather than the caller, and could have escalated the transfers for additional review.

Control 7: Vendor Verification and Account Whitelisting

The Practice: Maintain a whitelist of approved vendors and their associated bank accounts. New wire transfer requests to accounts not on the whitelist require special handling.

Process:

- Vendor Onboarding: When adding a new vendor, verify banking information through independent channels:

  - Call the vendor’s main business number (not from their email)

  - Request they provide bank account information on official letterhead

  - Cross-reference with business registration documents

  - Use third-party vendor verification services

- Account Whitelisting: Once verified, add the vendor and account to the approved list

- Request Process: Wire transfer requests can only reference approved vendors and approved accounts. Requests for new vendors or new accounts follow enhanced approval procedures
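The onboarding-then-whitelist process can be enforced in code so that an account cannot become payable until every verification step is recorded. A minimal Python sketch: the check names mirror the four onboarding steps listed above, requiring all four is an assumption (the text lists them without saying which are mandatory), and the vendor and account identifiers are invented.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

# One entry per onboarding step listed above (assumption: all are mandatory).
ONBOARDING_CHECKS = frozenset({
    "callback_main_number",
    "letterhead_bank_details",
    "registration_cross_check",
    "third_party_verification",
})


@dataclass
class VendorRecord:
    name: str
    approved_accounts: Set[str] = field(default_factory=set)
    completed_checks: Dict[str, Set[str]] = field(default_factory=dict)  # account -> checks

    def record_check(self, account: str, check: str) -> None:
        if check not in ONBOARDING_CHECKS:
            raise ValueError(f"Unknown check: {check}")
        self.completed_checks.setdefault(account, set()).add(check)

    def approve_account(self, account: str) -> None:
        missing = ONBOARDING_CHECKS - self.completed_checks.get(account, set())
        if missing:
            raise PermissionError(f"{self.name}/{account} missing checks: {sorted(missing)}")
        self.approved_accounts.add(account)


def route_request(whitelist: Dict[str, VendorRecord], vendor: str, account: str) -> str:
    """Wires to an approved vendor AND approved account follow standard approval;
    anything else (new vendor, or a 'we changed banks' account) gets enhanced review."""
    record = whitelist.get(vendor)
    if record is None or account not in record.approved_accounts:
        return "enhanced_approval"
    return "standard_approval"
```

Tracking checks per account, not per vendor, is the point: a known vendor announcing a new bank account starts from zero verified checks.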

Real-World Protection:

Business Email Compromise often involves redirecting payments to attacker-controlled accounts. Instead of wiring to “Acme Trading Solutions” at Chase Bank, the attacker sends an email saying “Our Acme Trading account moved to a new bank at [attacker’s account].”

Vendor account whitelisting stops this attack immediately. The system rejects the request because the account doesn’t match the approved vendor record, triggering manual review and verification.

Part 4: Defending Against Deepfake-Specific Threats

Deepfake Threat Assessment

Before implementing deepfake-specific controls, assess your organization’s vulnerability:

High Risk if:

- Your executives are public figures (conference speakers, podcast guests)

- Your fund has active investor relations (frequent video presentations)

- Your executives participate in media interviews or podcasts

- You operate in high-profile strategies (crypto, ESG, climate tech)

- Your firm has recently drawn activist investor attention or been targeted by competitors

Medium Risk if:

- Your executives maintain professional social media presence

- You publish earnings calls or investor updates online

- Your executives speak at industry conferences

Lower Risk if:

- Your organization maintains a low public profile

- Limited executive media appearances

- Minimal published video content
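To make the assessment repeatable from one review to the next, the criteria can be written down as data. A small Python sketch, assuming (since the text does not say) that a single matching factor is enough to place the firm in that tier and that the highest matching tier wins. The factor names are hypothetical labels for the bullets above.

```python
from typing import AbstractSet

HIGH_RISK_FACTORS = frozenset({
    "executives_are_public_figures",
    "active_video_investor_relations",
    "media_interviews_or_podcasts",
    "high_profile_strategy",
    "recent_activist_or_competitor_attention",
})
MEDIUM_RISK_FACTORS = frozenset({
    "professional_social_media",
    "published_earnings_calls_or_updates",
    "industry_conference_speakers",
})


def deepfake_risk_tier(factors: AbstractSet[str]) -> str:
    """Return 'High', 'Medium', or 'Lower' for the set of factors that apply."""
    unknown = set(factors) - HIGH_RISK_FACTORS - MEDIUM_RISK_FACTORS
    if unknown:
        raise ValueError(f"Unrecognized factors: {sorted(unknown)}")
    if set(factors) & HIGH_RISK_FACTORS:
        return "High"
    if set(factors) & MEDIUM_RISK_FACTORS:
        return "Medium"
    return "Lower"
```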

Be honest in this assessment. Most hedge funds have moderate-to-high deepfake risk given typical business development activities.

Control 8: AI-Based Voice and Video Detection

The Practice: Implement real-time deepfake detection systems for voice and video communications related to wire transfers. These systems analyze audio and video in real time to identify synthetic content.

Detection Methods:

Modern deepfake detection analyzes:

- Micro-expressions (facial movement inconsistencies)

- Eye blink patterns (irregular or absent)

- Audio artifacts (voice deepfakes sometimes reveal training artifacts)

- Temporal inconsistencies (face-to-voice synchronization)

- Background and lighting anomalies

Limitations to Understand:

Current detection systems are effective but not perfect. They work best when:

- Deepfakes are generated quickly (lower quality)

- Detection systems are recent (trained on current deepfake generation methods)

- Multiple detection vectors are combined (audio + video + behavioral)

Limitations include:

- Highly sophisticated deepfakes (requiring significant time/resources) may evade detection

- Detection systems require regular updates as deepfake technology evolves

- Some detection methods create false positives (legitimate video calls sometimes flagged)

Practical Implementation:

Rather than relying solely on detection, use it as an additional control layer:

- Phone call comes in claiming to be your CFO requesting wire

- System analyzes voice in real time for deepfake artifacts

- If deepfake is detected → automatic escalation to senior staff

- If no deepfake detected → proceed with normal verification controls

- Challenge questions answered correctly → authorization proceeds

This layered approach means deepfake detection works as an additional safety net rather than the primary control.
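The layered flow above can be written as a small decision function, which makes one property explicit: a clean detector result never approves a wire on its own. A Python sketch, assuming a hypothetical detector that returns a score from 0 to 1 and a 0.5 escalation threshold. The text does not say what happens when normal verification fails, so this sketch assumes the wire is held.

```python
from enum import Enum


class Outcome(Enum):
    ESCALATE = "escalate to senior staff"  # detector flagged synthetic media
    HOLD = "hold; do not release"          # normal verification failed (assumption)
    PROCEED = "authorization proceeds"


def handle_wire_call(
    deepfake_score: float,     # hypothetical detector output, 0.0 (real) to 1.0 (synthetic)
    callback_confirmed: bool,  # confirmed via a number from the company directory
    challenge_passed: bool,    # current-period challenge question answered correctly
    threshold: float = 0.5,    # assumption: tune to your detector's false-positive rate
) -> Outcome:
    if deepfake_score >= threshold:
        return Outcome.ESCALATE
    # "No deepfake detected" is not proof of authenticity: normal controls still apply.
    if not (callback_confirmed and challenge_passed):
        return Outcome.HOLD
    return Outcome.PROCEED
```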

Vendor Options:

- Respeecher (voice deepfake detection)

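- Liveness-detection services from cloud providers (Azure AI Face liveness detection, Amazon Rekognition Face Liveness), which are built for identity verification flows rather than live call analysis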

- Specialized financial services solutions (Clarity, BrightSide AI)

Control 9: Real-Time Verification Protocols for Video Communications

The Practice: If executives must use video calls for wire transfer authorization, establish protocols that detect deepfakes in real time:

Protocol 1: Challenge-Response with Screen Sharing

During video call:

- Operations manager asks challenge question (reference to internal knowledge)

- CFO provides answer verbally

- CFO shares screen showing live calendar or internal email to confirm identity

- CFO performs an unexpected action (check a specific email, open a specific document, navigate to internal system)

This requires the deepfake to not only have the CFO’s voice and appearance but also access to the CFO’s digital systems—a much higher bar.

Protocol 2: Third-Party Verification Call

During suspicious video call:

- Don’t authorize based on the video call alone

- End the video call

- Use pre-established contact to reach the executive directly: “I’m ending this call. I’m going to call you on your office number to verify this request independently.”

- Call using your directory information

- Verify the request in a completely separate conversation

This defeats deepfake attacks that depend on a single channel: unless the attacker has also compromised the executive’s directory number, they can’t maintain the deception across multiple independent communication channels.

Protocol 3: Time-Based Challenges

When deepfake video calls are particularly convincing, use time-based verification:

- Executive makes authorization request via video

- You acknowledge the request but do not authorize

- Statement: “I’ll need to verify this request separately. Call your assistant and have them email me confirmation from your corporate email account. We’ll process the transfer in 2 hours.”

This forces the attacker to either:

- Maintain the deepfake deception through additional independent communications (very difficult)

- Abandon the attack

Many attackers will abandon the attempt at this point, because the additional verification steps exceed what they can fake.

Control 10: Employee Training for Deepfake Recognition

The Practice: Most employees have never seen a deepfake in action. They don’t understand how convincing the technology is. Training should include:

Scenario-Based Simulations:

Rather than generic “don’t trust suspicious emails,” provide realistic deepfake simulations:

- Play recordings of voice deepfakes and ask employees to identify them (1-2 out of 10 will notice artifacts)

- Show deepfake video excerpts and ask if they appear authentic (most will say yes)

- Conduct simulated deepfake “vishing” (voice phishing) calls to see who follows verification protocols

- Run tabletop exercises: “Your CEO just called from Zoom requesting an urgent $15 million wire. Walk me through how you’d verify this request.”

Key Learning Points:

- Source Material is Everywhere: Your executives’ voices and faces are public. Attackers can access training data without compromising your systems.

- Real-Time Response is Possible: Modern AI can generate synthetic speech and video in real time with minimal latency. “Having a conversation” with a deepfake is possible.

- Detection is Hard: Humans can’t reliably distinguish deepfakes from authentic media. Our intuition about what’s “real” is unreliable against synthetic media created by advanced AI.

- Verification Protocols Trump Intuition: Following defined verification procedures (callback to known number, challenge questions, waiting periods) works even when the deepfake is convincing.

- Pressure and Urgency Are Attack Markers: If the request creates time pressure and bypasses normal procedures, it warrants heightened skepticism.

Training Frequency:

Conduct deepfake-specific training quarterly. Annually isn’t sufficient given how rapidly the technology evolves.

Measurement:

Track:

- Percentage of employees who correctly identify deepfakes during simulations

- Percentage following proper verification protocols during simulated vishing calls

- Time required to recognize anomalies in synthetic media

- Post-training confidence in identifying deepfake attacks

Use these metrics to identify staff needing additional training.
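The first three metrics can be computed directly from simulation logs (post-training confidence usually comes from a survey, so it is left out here). A Python sketch, assuming a hypothetical per-employee record for each simulation; “needs additional training” is assumed to mean the employee skipped the verification protocol at least once.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass(frozen=True)
class SimulationResult:
    employee: str
    identified_deepfake: bool         # correctly labeled the sample as synthetic
    followed_protocol: bool           # e.g. called back on the directory number
    seconds_to_flag: Optional[float]  # time to raise a concern; None if never flagged


def summarize(results: List[SimulationResult]) -> Dict[str, Optional[float]]:
    if not results:
        raise ValueError("no simulation results")
    n = len(results)
    times = [r.seconds_to_flag for r in results if r.seconds_to_flag is not None]
    return {
        "pct_identified": 100 * sum(r.identified_deepfake for r in results) / n,
        "pct_followed_protocol": 100 * sum(r.followed_protocol for r in results) / n,
        "median_seconds_to_flag": median(times) if times else None,
    }


def needs_retraining(results: List[SimulationResult]) -> List[str]:
    return sorted({r.employee for r in results if not r.followed_protocol})
```

Keying retraining on protocol compliance rather than on spotting the fake reflects the guidance above: verification procedures, not intuition, are what stop the wire.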

Part 5: Regulatory Developments and Emerging Requirements

2026 Nacha Rule Changes for ACH Monitoring

For firms using ACH as part of their broader payments ecosystem, the 2026 Nacha rule changes require proactive fraud monitoring.

Phase 1 (March 20, 2026): Large senders and receivers must implement risk-based monitoring for both unauthorized AND “false pretenses” payments.

Phase 2 (June 2026): All ACH participants must have equivalent monitoring.

What This Means:

In-scope participants must identify payments that appear technically authorized but are sent under false pretenses (e.g., a deepfake convinced the person authorizing the payment that it was legitimate).

This requires:

- Behavioral monitoring (comparing to historical patterns)

- Anomaly detection (identifying unusual transfers)

- Documented risk assessment and response procedures

Even if your firm primarily uses wire transfers rather than ACH, Nacha rules indicate regulatory direction: fraud detection must evolve beyond “authorized vs. unauthorized” to “authorized but potentially fraudulent based on context.”

FinCEN Guidance on Deepfakes

In November 2024, FinCEN issued an alert on fraud schemes involving deepfake media. Key points:

- Financial institutions should account for deepfake media in their fraud detection and reporting

- Deepfakes may be used to circumvent standard identity verification and authentication procedures

- Suspicious activity reports related to this activity should reference the alert’s key term, “FIN-2024-DEEPFAKEFRAUD”

- Accounts showing deepfake fraud indicators may warrant enhanced due diligence

For hedge funds, this guidance signals that regulators expect proactive defense against deepfake-based fraud, not reactive response.

Part 6: Implementation Roadmap

Implementing all controls simultaneously is unrealistic. Use this phased approach:

Phase 1: Foundation

Priority Controls:

- Document dual authorization thresholds

- Establish segregation of duties for wire transfers

- Create vendor whitelist and account verification procedures

- Establish pre-approved communication channels for wire requests

Resource Required: 40-60 hours (primarily process documentation) – will vary based on fund operational details

Expected Outcome: Basic controls addressing traditional BEC fraud

Phase 2: Deepfake Hardening

Priority Controls:

- Implement challenge questions for executive verification

- Establish encrypted communication requirements for wire requests

- Create waiting period policy for unusual transfers

- Conduct initial deepfake awareness training

Resource Required: 20-30 hours (training development, policy creation) – will vary based on fund operational details

Expected Outcome: Organizational awareness of deepfake threat; initial defenses deployed

Phase 3: Advanced Detection

Priority Controls:

- Implement transaction monitoring/anomaly detection system

- Integrate deepfake detection tools for voice/video calls

- Develop real-time verification protocols for video calls

- Establish quarterly deepfake training program

Resource Required: 30-50 hours + vendor integration (ongoing) – will vary based on fund operational details

Estimated Cost: $5,000-15,000 annually for detection software

Expected Outcome: Multi-layered defense combining human controls with AI-based detection

Phase 4: Continuous Improvement (Ongoing)

Activities:

- Quarterly review of attempted wire fraud incidents

- Semi-annual updating of verification protocols

- Ongoing monitoring of deepfake technology evolution

- Annual third-party audit of wire transfer controls

Resource Required: 5-10 hours quarterly

Conclusion: Making the Business Case

Securing wire transfers requires investment. You’ll implement additional procedures. Authorizations will take longer. Your operations team will need training. You may need to purchase monitoring systems.

Is it worth it? For a $500 million fund, a single $25 million wire fraud loss represents 5% of AUM. Beyond the immediate financial loss, consider:

- Investor confidence loss: Would your limited partners trust you with capital after a $25 million fraud?

- Regulatory scrutiny: State and federal regulators would likely mandate corrective action

- Opportunity cost: Capital you should be deploying sits in bank accounts during investigation

- Operational disruption: Staff time spent on fraud response and recovery

Compare this to the cost of Phase 1-3 implementation: $30,000-50,000 in software and consulting + 90-140 hours of staff time (the sum of the phase estimates above). For a fund of any meaningful size, the ROI is obvious.
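The comparison can be made explicit as a break-even calculation: divide what the controls cost by the loss they prevent, and the result is how much they must reduce the annual probability of that loss to pay for themselves. A Python sketch using the figures in this article. The $150 loaded hourly staff cost is an assumption, not a figure from the text.

```python
from typing import Tuple

# Figures from this article; HOURLY_STAFF_COST is a hypothetical assumption.
AUM = 500_000_000
FRAUD_LOSS = 25_000_000
PHASE_HOURS = {"Phase 1": (40, 60), "Phase 2": (20, 30), "Phase 3": (30, 50)}
SOFTWARE_AND_CONSULTING = (30_000, 50_000)
HOURLY_STAFF_COST = 150  # assumption: loaded cost per staff hour, USD


def total_hours() -> Tuple[int, int]:
    """Sum the low and high staff-hour estimates across Phases 1-3."""
    return (
        sum(lo for lo, _ in PHASE_HOURS.values()),
        sum(hi for _, hi in PHASE_HOURS.values()),
    )


def break_even_probability(control_cost: float, loss: float) -> float:
    """Reduction in annual probability of the loss at which the controls pay for themselves."""
    return control_cost / loss


low_hours, high_hours = total_hours()  # (90, 140)
worst_case_cost = SOFTWARE_AND_CONSULTING[1] + high_hours * HOURLY_STAFF_COST  # 71,000
print(f"Loss as share of AUM: {FRAUD_LOSS / AUM:.0%}")  # 5%
print(f"Break-even probability reduction: {break_even_probability(worst_case_cost, FRAUD_LOSS):.2%}")  # 0.28%
```

Under these assumptions, the controls cover their worst-case implementation cost if they cut the chance of a single $25 million loss by roughly three-tenths of a percentage point.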

The stronger argument, though, is that wire transfer fraud is no longer a theoretical risk. It’s an industry-specific threat currently targeting alternative investment firms. The vulnerable firms are the ones that haven’t implemented these controls, not the ones making prudent investments in their control environments.

The procedures and tools to defeat voice cloning and deepfake attacks exist. The controls that work are operationally feasible. The investment is proportional to the risk.

The only question is whether you’ll implement these defenses before or after your firm experiences attempted wire fraud.

References

Clarity AI. (2025). “$25M Deepfake CEO Scam Shakes Hong Kong Firm.” Retrieved from https://www.getclarity.ai/ai-deepfake-blog

BrightSide AI. (2025). “Deepfake CEO Fraud: $50M Voice Cloning Threat to CFOs.” Retrieved from https://www.brside.com/blog/deepfake-ceo-fraud-50m-voice-cloning-threat-cfos

FS-ISAC. (2024). “Deepfakes in the Financial Sector: Understanding the Threats, Managing the Risks.” Retrieved from https://www.fsisac.com/hubfs/Knowledge/AI/DeepfakesInTheFinancialSector-UnderstandingTheThreatsManagingTheRisks.pdf

IBM. (2024). “How a New Wave of Deepfake-Driven Cyber Crime Targets Businesses.” Retrieved from https://www.ibm.com/think/insights/new-wave-deepfake-cybercrime

DC.gov. (2024). “Artificial Intelligence (AI) and Investment Fraud.” Retrieved from https://disb.dc.gov/page/artificial-intelligence-ai-and-investment-fraud

Fortinet. (2024). “How Deepfake AI Is Transforming Cybersecurity.” Retrieved from https://www.fortinet.com/resources/cyberglossary/deepfake-ai

Outseer. (2025). “2026 Nacha Rule Change: Proactive Fraud Monitoring of ACH Now a Requirement.” Retrieved from https://www.outseer.com/blog/2026-nacha-rule-change-proactive-fraud-monitoring-requirement